
Create a Cinder volume

Once you deploy an OpenStack environment with the EMC VNX plugin, you can start creating Cinder volumes. The following example shows how to create a 10 GB volume and attach it to a VM.

Log in to a controller node.

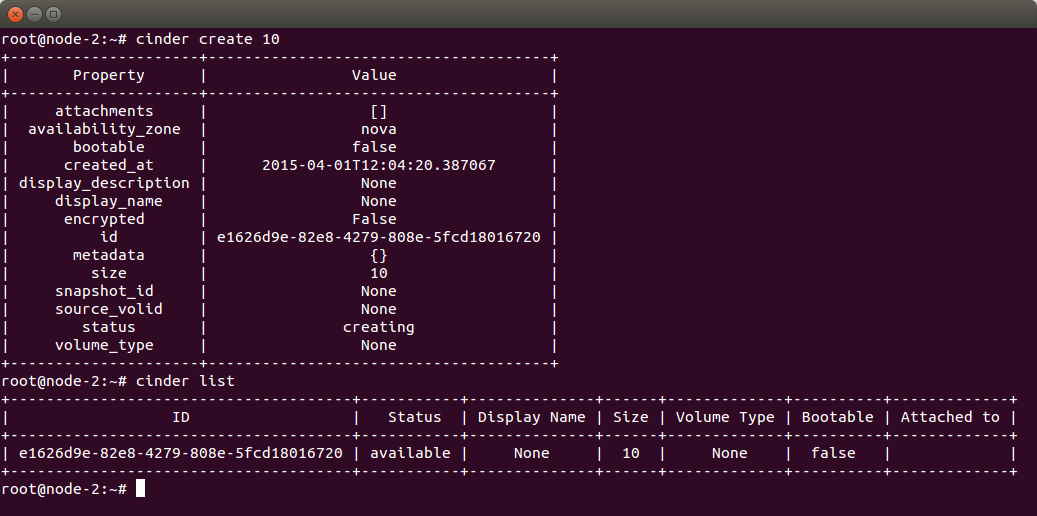

Create a Cinder volume:

# cinder create <VOLUME_SIZE>The output looks as follows:

Verify that the volume is created and is ready for use:

# cinder list

In the output, verify the ID and the available status of the volume (see the screenshot above).

Verify the volume on EMC VNX:

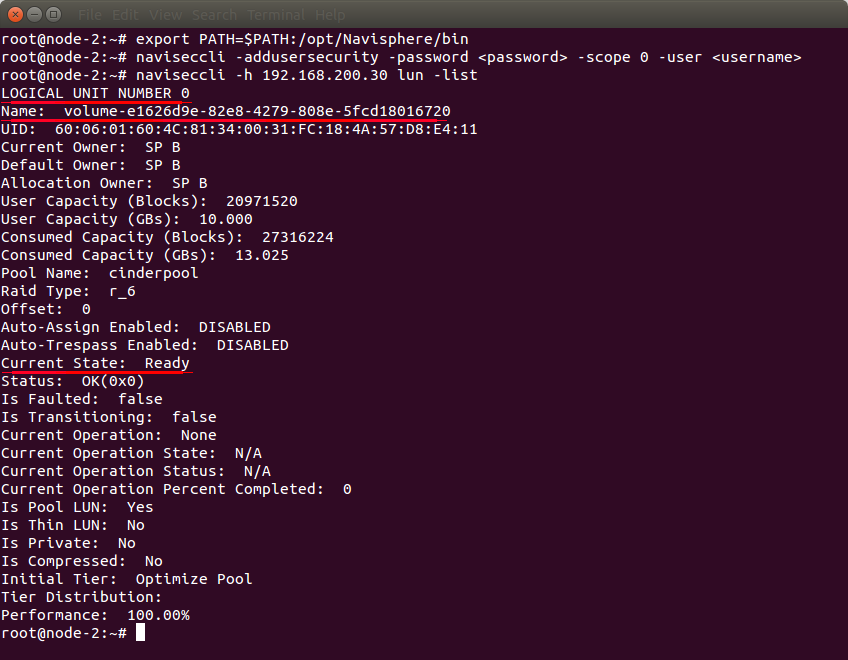

Add the /opt/Navisphere/bin directory to the PATH environment variable:

# export PATH=$PATH:/opt/Navisphere/bin

Save your EMC credentials to simplify the syntax of subsequent naviseccli commands:

# naviseccli -addusersecurity -password <password> -scope 0 \
  -user <username>

List LUNs created on EMC:

# naviseccli -h <SP IP> lun -list

In the given example, there is one successfully created LUN with:

- ID: 0
- Name: volume-e1626d9e-82e8-4279-808e-5fcd18016720 (naming schema is volume-<Cinder volume id>)
- Current state: Ready

In this example, the IP address of the EMC VNX SP is 192.168.200.30.

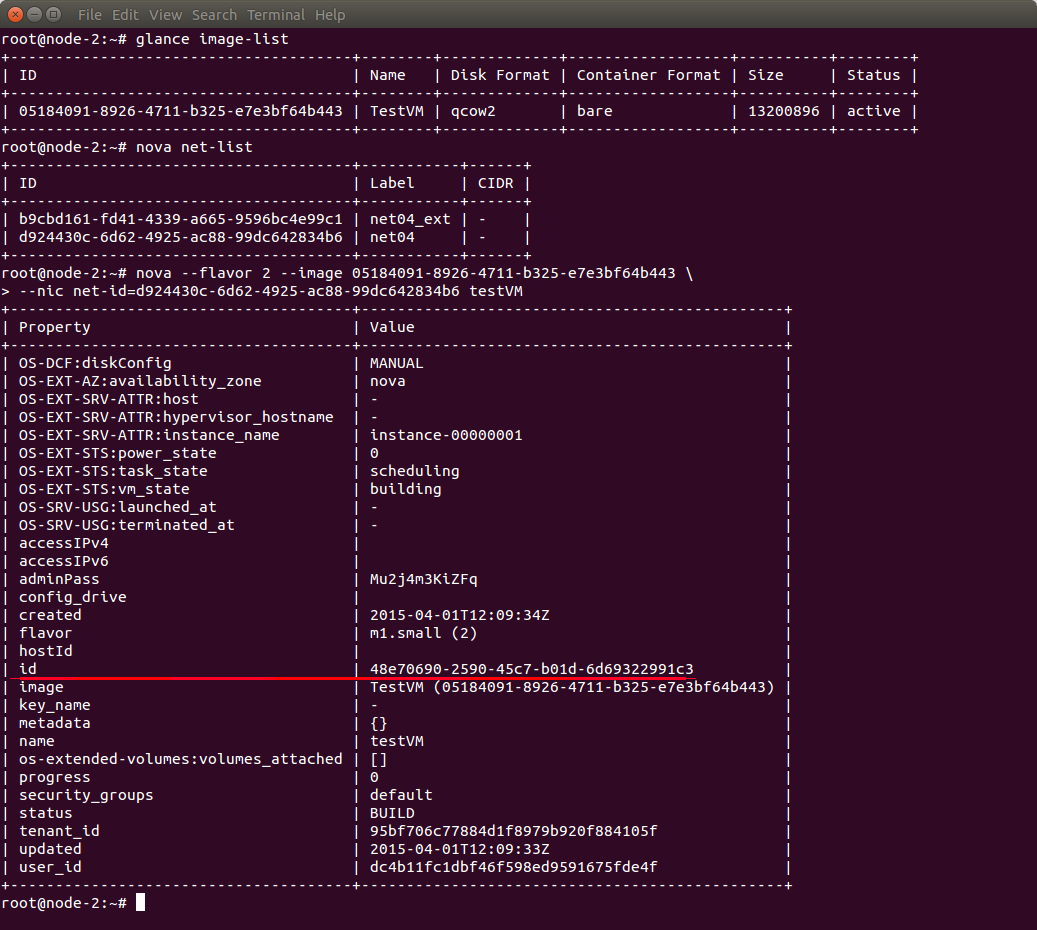
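If you prefer to check the LUN state from a script rather than by reading the listing, a small filter along the following lines may help. It is only a sketch: lun_state_for_volume is a hypothetical helper, and it assumes that each LUN record in the lun -list output contains a "Name:" line followed later by a "Current State:" line, so verify that against the output of your own array.

```shell
# Hypothetical helper: print the "Current State" of the LUN that backs a
# Cinder volume. Reads `naviseccli ... lun -list` output on stdin.
# Assumption: each LUN record has a "Name:" line followed later by a
# "Current State:" line; adjust the patterns if your output differs.
lun_state_for_volume() {
    local volume_id="$1"
    awk -v want="volume-${volume_id}" '
        # Remember the name of the LUN record we are currently inside.
        $1 == "Name:" { name = $2 }
        # Print the state only for the record whose name matches.
        $1 == "Current" && $2 == "State:" && name == want { print $3; exit }
    '
}

# Example usage (SP_IP and VOLUME_ID are placeholders you set yourself):
#   naviseccli -h "$SP_IP" lun -list | lun_state_for_volume "$VOLUME_ID"
```

The helper builds the LUN name from the volume-<Cinder volume id> naming schema described above and prints nothing if no LUN with that name is listed.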

Get the Glance image ID and the network ID:

# glance image-list
# nova net-list

Create a new VM using the Glance image ID and the network ID:

# nova boot --flavor 2 --image <IMAGE_ID> --nic net-id=<NIC_NET-ID> <VM_NAME>

The VM ID in the given example is 48e70690-2590-45c7-b01d-6d69322991c3.

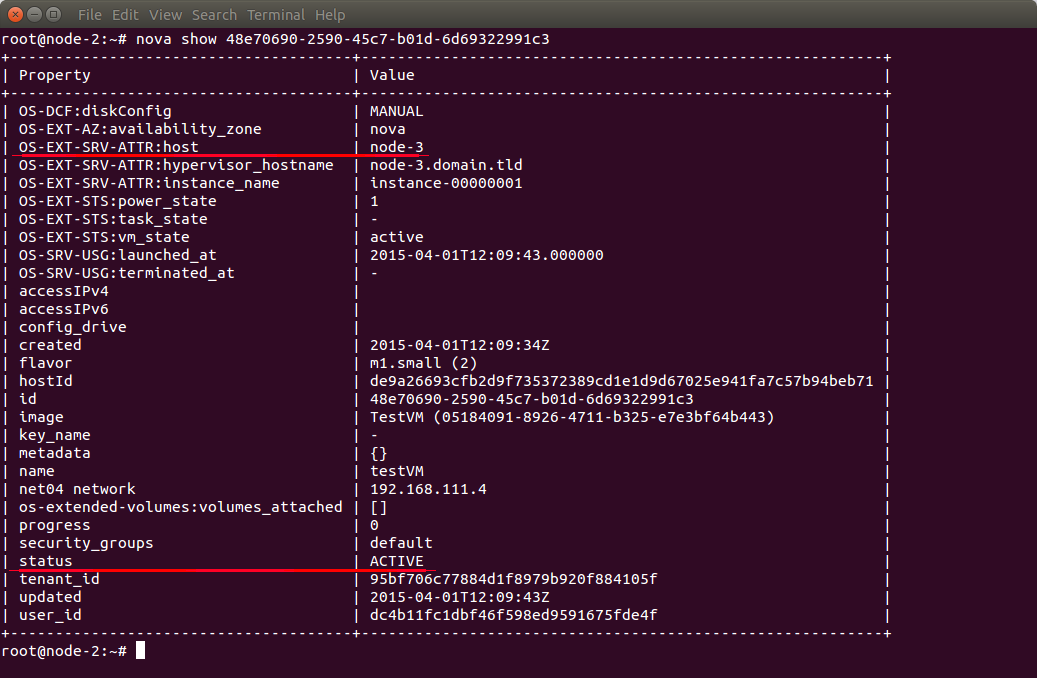

Check the STATUS of the new VM and on which node it has been created:

# nova show <id>

In the example output, the VM is running on node-3 and is active:

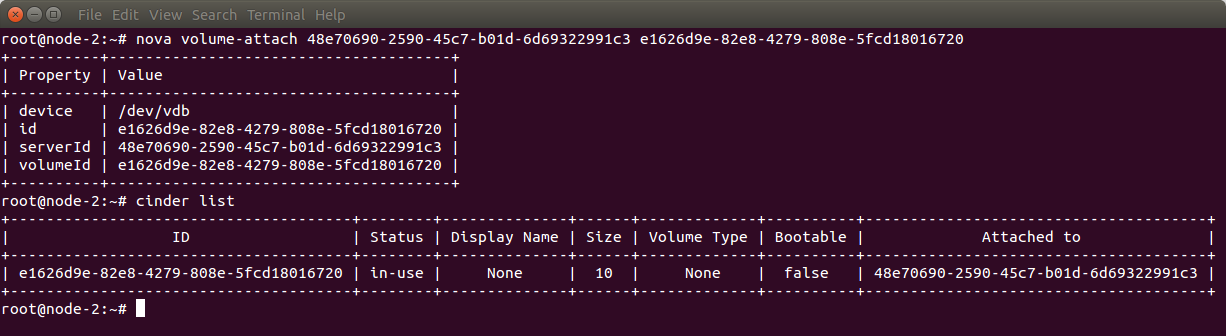
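To read the status and the host from a script, you can filter the nova show table. The sketch below assumes that the table rows have the form "| property | value |" and that the host is reported under the OS-EXT-SRV-ATTR:host property, which is normally visible only to admin users. nova_property is a hypothetical helper name.

```shell
# Hypothetical helper: print the value of one property from the two-column
# table printed by `nova show`. Reads the table on stdin.
# Assumption: rows look like "| property | value |".
nova_property() {
    local key="$1"
    awk -F'|' -v key="$key" '
        {
            prop = $2; val = $3
            gsub(/^[ \t]+|[ \t]+$/, "", prop)   # trim padding around the cells
            gsub(/^[ \t]+|[ \t]+$/, "", val)
            if (prop == key) { print val; exit }
        }
    '
}

# Example usage (VM_ID is a placeholder you set yourself):
#   nova show "$VM_ID" | nova_property status
#   nova show "$VM_ID" | nova_property OS-EXT-SRV-ATTR:host
```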

Attach the Cinder volume to the VM and verify its state:

# nova volume-attach <VM id> <volume id>
# cinder list

The output looks as follows:

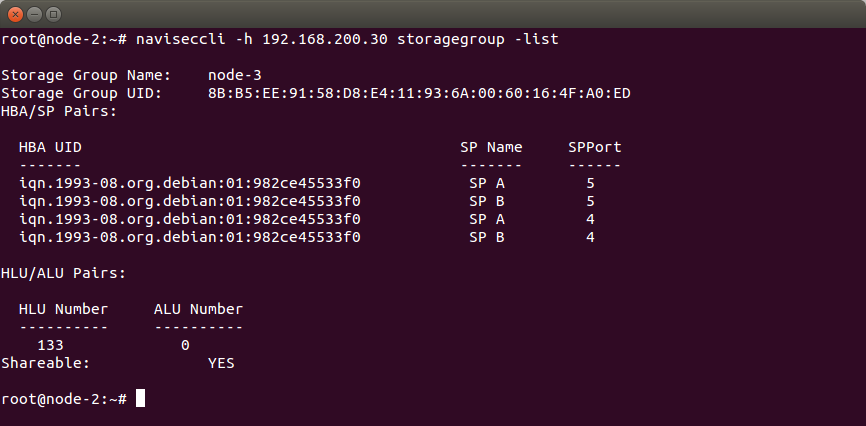
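Attaching a volume can take a moment, so a script may need to poll cinder list until the volume reaches the expected state. The following is a minimal sketch. Both function names are hypothetical, the Status column is located by its header text (assumed to be "Status", next to an "ID" column), and the in-use value used in the example is the state an attached volume is expected to show.

```shell
# Hypothetical helper: print the Status of one volume from `cinder list`
# table output on stdin. Columns are located by header name, not position.
# Assumption: the header row contains cells named "ID" and "Status".
volume_status() {
    local volume_id="$1"
    awk -F'|' -v id="$volume_id" '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        # Until the header row is seen, look for the ID and Status columns.
        !id_col {
            for (i = 1; i <= NF; i++) {
                if (trim($i) == "ID") id_col = i
                if (trim($i) == "Status") st_col = i
            }
            next
        }
        # Data rows: print the status of the matching volume and stop.
        st_col && trim($id_col) == id { print trim($st_col); exit }
    '
}

# Hypothetical helper: poll `cinder list` until the volume has the wanted
# status. Gives up after the given number of attempts (default 30).
wait_for_volume_status() {
    local volume_id="$1" want="$2" tries="${3:-30}" i=0
    while [ "$i" -lt "$tries" ]; do
        if [ "$(cinder list | volume_status "$volume_id")" = "$want" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep 2
    done
    return 1
}

# Example usage (VOLUME_ID is a placeholder you set yourself):
#   wait_for_volume_status "$VOLUME_ID" in-use || echo "volume not attached"
```

The same helper can be used right after cinder create by waiting for the available status instead.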

List the storage groups configured on EMC VNX:

# naviseccli -h <SP IP> storagegroup -list

The output looks as follows:

In the example output, we have:

- One storage group, node-3, with one LUN attached.
- Four iSCSI HBA/SP pairs, one pair per SP port.
- The LUN that has the local ID 0 (ALU Number) and that is available as LUN 133 (HLU Number) for node-3.

You can also check whether the iSCSI sessions are active:

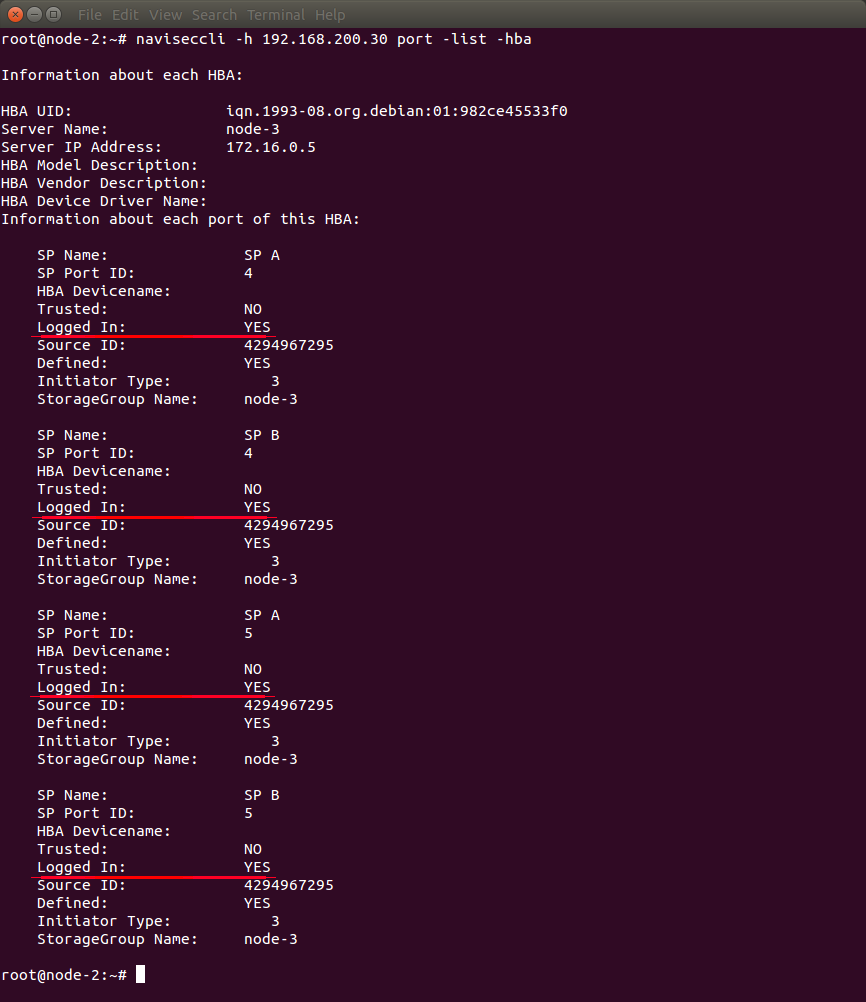

# naviseccli -h <SP IP> port -list -hba

The output looks as follows:

Check the Logged In parameter of each port. In the example output, all four sessions are active, as each of them shows Logged In: YES.

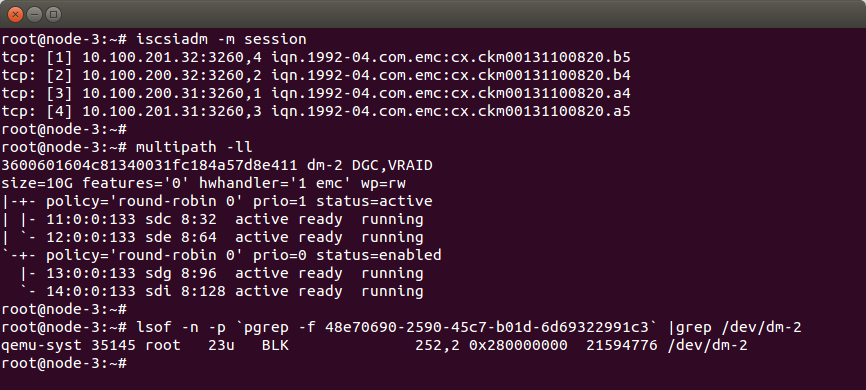
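With more than a few ports, counting the Logged In lines by eye becomes error-prone. The sketch below summarizes them instead. logged_in_summary is a hypothetical helper, and it assumes that every HBA/SP pair in the port -list -hba output has exactly one line of the form "Logged In: YES" or "Logged In: NO".

```shell
# Hypothetical helper: read `naviseccli ... port -list -hba` output on stdin
# and print "<logged in>/<total>" for the HBA/SP pairs it lists.
# Assumption: each pair has one "Logged In: YES" or "Logged In: NO" line.
logged_in_summary() {
    awk '
        $1 == "Logged" && $2 == "In:" {
            total++
            if ($3 == "YES") yes++
        }
        END { printf "%d/%d\n", yes, total }
    '
}

# Example usage (SP_IP is a placeholder you set yourself):
#   naviseccli -h "$SP_IP" port -list -hba | logged_in_summary
```

For an environment like the one in this example, with four active sessions, the summary would be expected to show the same number on both sides of the slash.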

When you log in to node-3, you can verify that:

The iSCSI sessions are active:

# iscsiadm -m session

A multipath device has been created by the multipath daemon:

# multipath -ll

The VM is using the multipath device:

# lsof -n -p `pgrep -f <VM id>` | grep /dev/<DM device name>

In the example output, we have the following:

- There are four active sessions (the same as on the EMC).
- The multipath device dm-2 has been created.
- The multipath device has four paths and all are running (one per iSCSI session).
- QEMU is using the /dev/dm-2 multipath device.
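The relationship described above (one running multipath path per iSCSI session) can also be checked with two small counters. This is a sketch under stated assumptions, not a definitive check: both function names are hypothetical, session lines are assumed to start with the transport name "tcp:", and path lines are assumed to end with the word "running". The multipath -ll layout in particular varies between versions.

```shell
# Hypothetical helper: count iSCSI sessions in `iscsiadm -m session` output
# read from stdin.
# Assumption: one line per session, starting with the transport name "tcp:".
count_iscsi_sessions() {
    # grep -c exits non-zero when the count is 0, so mask the exit status.
    grep -c '^tcp:' || true
}

# Hypothetical helper: count running paths in `multipath -ll` output read
# from stdin.
# Assumption: each path line ends with the word "running".
count_running_paths() {
    grep -c 'running[[:space:]]*$' || true
}

# Example usage on the compute node:
#   sessions=$(iscsiadm -m session | count_iscsi_sessions)
#   paths=$(multipath -ll | count_running_paths)
#   [ "$sessions" -eq "$paths" ] && echo "one running path per session"
```

If the node has more than one multipath device, the path count covers all of them, so compare the numbers only when a single volume is attached.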